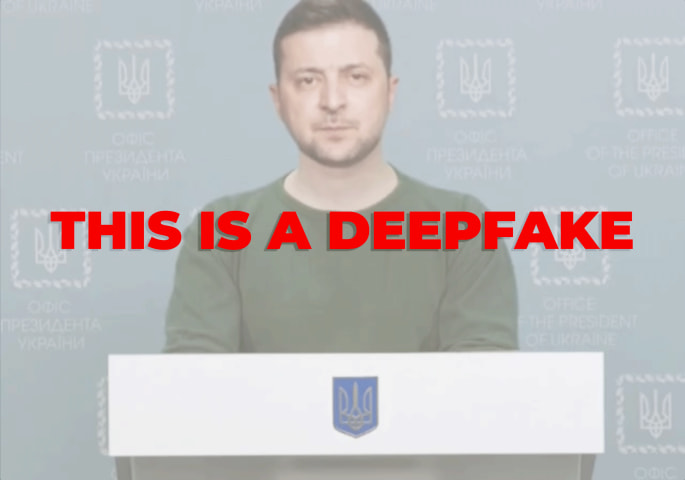

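Description: Russia-linked groups allegedly spread disinformation using AI-generated images and videos in order to influence Moldova's referendum on EU membership. The AI-driven media campaign reportedly included fabricated stories and manipulated footage. Its reported aim was to stoke fear and weaken pro-EU sentiment in the days leading up to the referendum.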

Editor Notes: Reconstructing some of the timeline of events:

1. September 18, 2024: Microsoft’s Threat Analysis Center (MTAC) publicly acknowledged ongoing monitoring of Russian disinformation efforts in Moldova, noting that it had collaborated with the Moldovan government to defend against influence operations. Russia’s tactics reportedly include AI-generated media, cyberattacks, and inauthentic social media accounts aimed at amplifying pro-Kremlin narratives and undermining pro-E.U. sentiment in Moldova.
2. October 1, 2024: A manipulated video surfaced online that reportedly depicted Dumitru Alaiba, Moldova's Minister of Economic Development and Digitalization, falsely and in compromising situations. Alaiba denounced the video as a "poor quality fake" and filed a police complaint to address the disinformation. (See Incident 841.)
3. October 7, 2024: Fake Ministry of Culture letters, circulated by dubious social media accounts, falsely claimed that Moldova would host an “LGBT festival” under E.U. influence.
4. October 17, 2024: Moldovan officials, with support from social media companies, reportedly began taking action against disinformation; Facebook removed numerous fake accounts, groups, and pages.
5. October 17, 2024: U.S. Senator Benjamin Cardin urged Meta and Alphabet executives to enforce anti-disinformation measures in Moldova more effectively.
6. October 20, 2024: Telegram suspended Ilan Shor’s “Stop EU” channel for violating local laws.
7. Around October 22, 2024: With the referendum nearing, Moldovan police continued monitoring and dismantling disinformation efforts while also citing Russian influence in a last-minute push against pro-E.U. messaging.

Alleged: Unknown AI developers developed an AI system deployed by Russia-backed influencers, Maria Zakharova, Ilan Shor and Government of Russia, which harmed Pro-EU Moldovans, Moldovan general public, Maia Sandu, Government of Moldova, Electoral integrity, Democracy and Dumitru Alaiba.

Incident Status

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

4.1. Disinformation, surveillance, and influence at scale

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Malicious Actors & Misuse

Entity

Which, if any, entity is presented as the main cause of the risk

Human

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Intentional

Incident Reports

Reports Timeline

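The police chief in Moldova, appointed by a government committed to joining the European Union (EU) and pulling away from Russia's sphere of influence, was startled when posters bearing a blunt message, "No to the EU", suddenly appeared in the country's capital.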

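The posters, written in Russian and Romanian, Moldova's main languages, appeared overnight last month at bus stops in Chisinau. Ostensibly, they were part of a promotional campaign for a concert by a popular Russian-language singer from Ukraine.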

But the timing set off alarm bells. The anti-EU message came…

Variants

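A "variant" is an incident that shares the same causative factors as an existing AI incident, produces similar harms, and involves the same intelligent system. Rather than indexing variants as entirely separate incidents, we list them as variations under the first similar incident submitted to the database. Unlike other submission types to the incident database, variants are not required to have reporting in evidence external to the Incident Database. See this research paper for details.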