Incident 146: Delphi, a research AI prototype, reportedly gave racially biased answers to ethical questions

Entities

CSETv1 Taxonomy Classifications

Incident Number

146

GMF Taxonomy Classifications

Known AI Goal Snippets

(Snippet Text: You can pose any question you like and be sure to receive an answer, wrapped in the authority of the algorithm rather than the soothsayer., Related Classifications: Question Answering)

Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

- AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Report Timeline


Got a moral dilemma you don't know how to resolve? Fancy making it worse? Why not turn to the wisdom of artificial intelligence, aka Ask Delphi: an intriguing research project from the Allen Ins…

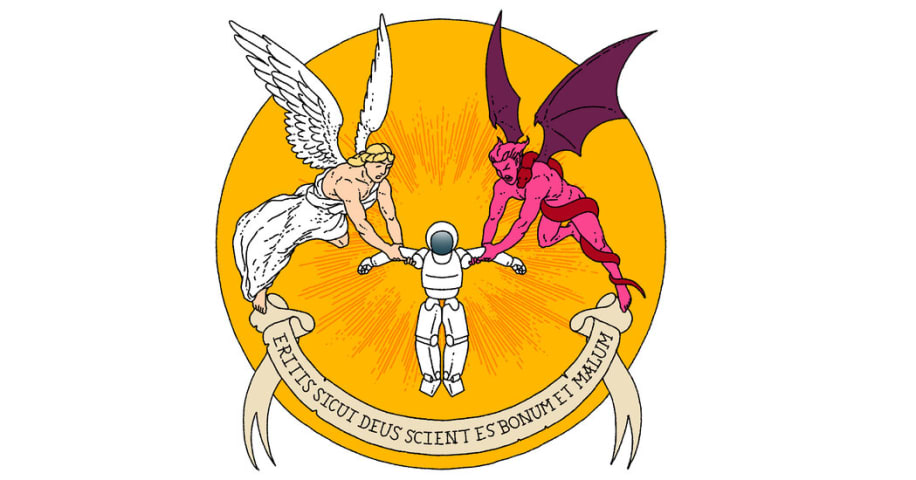

We have all been in situations where we had to make difficult ethical decisions. Why not dodge that pesky responsibility by outsourcing the choice to a machine learning algorithm?

That's the idea…

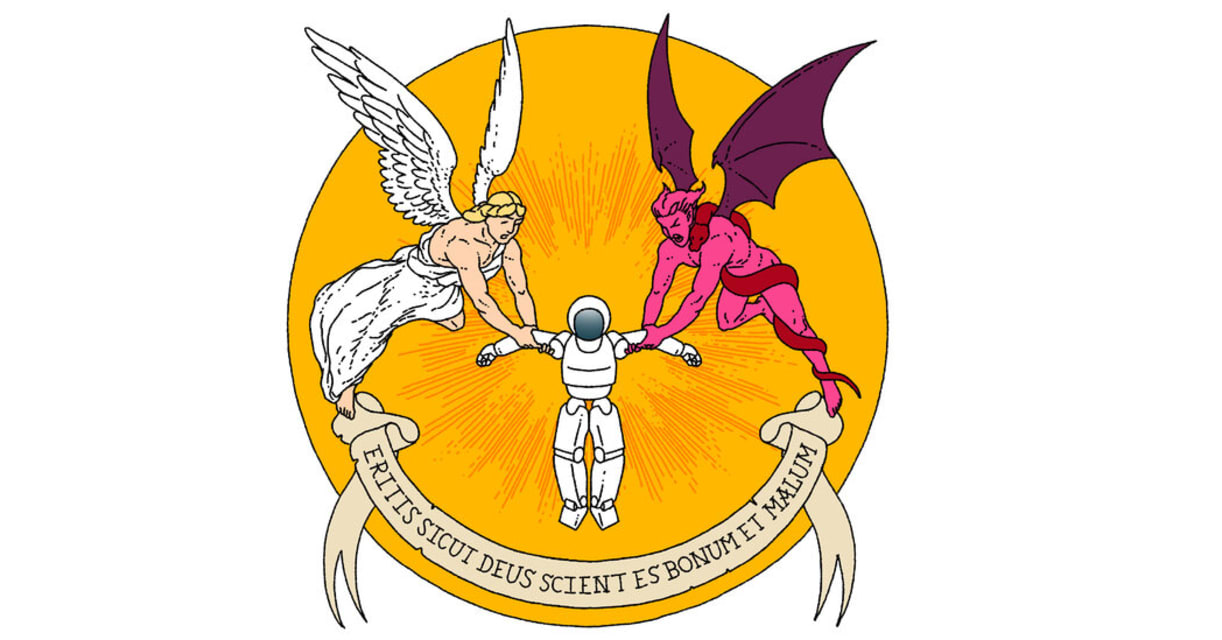

Last month, researchers at a Seattle artificial intelligence lab called the Allen Institute for AI unveiled a new technology designed to make moral judgments. They called it Delphi, after the oracle r…

Variants

Similar Incidents
