Incident 758: Teenager's Overdose Reportedly Linked to Meta's AI Systems Failing to Block Ads for Illegal Drugs

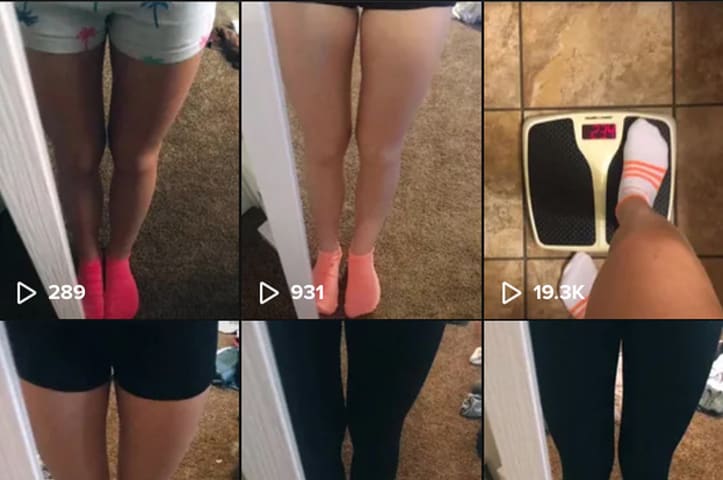

Description: Meta's AI moderation systems reportedly failed to block ads for illegal drugs on Facebook and Instagram, allowing users to access dangerous substances. The system's failure is linked to the overdose death of Elijah Ott, a 15-year-old who sought drugs through Instagram.

Entities

Alleged: Meta Platforms developed an AI system deployed by Meta Platforms, Instagram, and Facebook, which harmed Instagram users, Facebook users, and Elijah Ott.

Incident Statistics

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

7.3. Lack of capability or robustness

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- AI system safety, failures, and limitations

Entity

Which, if any, entity is presented as the main cause of the risk

AI

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Unintentional

Incident Reports

Reports Timeline


Meta Platforms is running ads on Facebook and Instagram that steer users to online marketplaces for illegal drugs, months after The Wall Street Journal first reported that the social-media giant was facing a federal investigat…

Variants

A "variant" is an AI incident similar to a known case: it has the same causative factors, harms, and AI system. Rather than listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.


Similar Incidents
