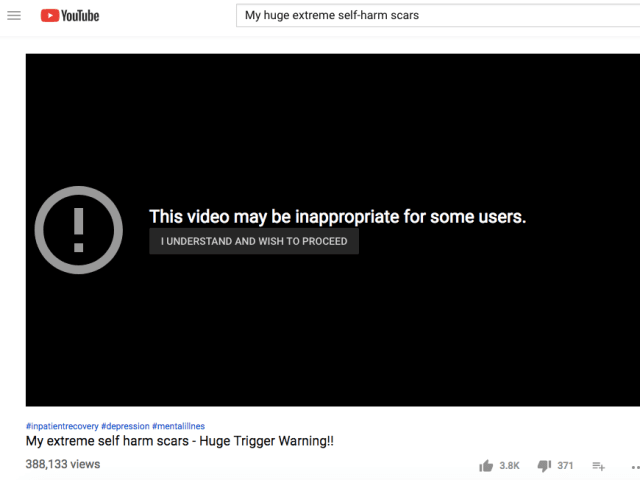

Description: An alleged Instagram algorithm malfunction caused users' Reels feeds to be overwhelmed with violent and distressing content. Many reported seeing deaths, extreme brutality, and other graphic material in rapid succession, often without prior engagement with similar content. The sudden exposure caused psychological distress for many users, with minors and vulnerable individuals particularly affected by the graphic content. Meta confirmed an AI failure was responsible and apologized.

The OECD AI Incidents and Hazards Monitor (AIM) automatically collects and classifies AI-related incidents and hazards in real time from reputable news sources worldwide.

Entities

Alleged: Meta, Instagram Reels and Instagram recommendation algorithm developed and deployed an AI system, which harmed minors, Meta users and Instagram users.

Alleged implicated AI systems: Instagram Reels and Instagram recommendation algorithm

Incident Stats

Incident ID: 957

Report Count: 1

Incident Date: 2025-02-28

Editors: Dummy Dummy

Incident Reports

Mark Zuckerberg’s Meta has apologised after Instagram users were subjected to a flood of violence, gore, animal abuse and dead bodies on their Reels feeds.

Users reported the footage after an apparent malfunction in Instagram’s algorithm, w…

Variants

A "variant" is an AI incident similar to a known case—it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.
