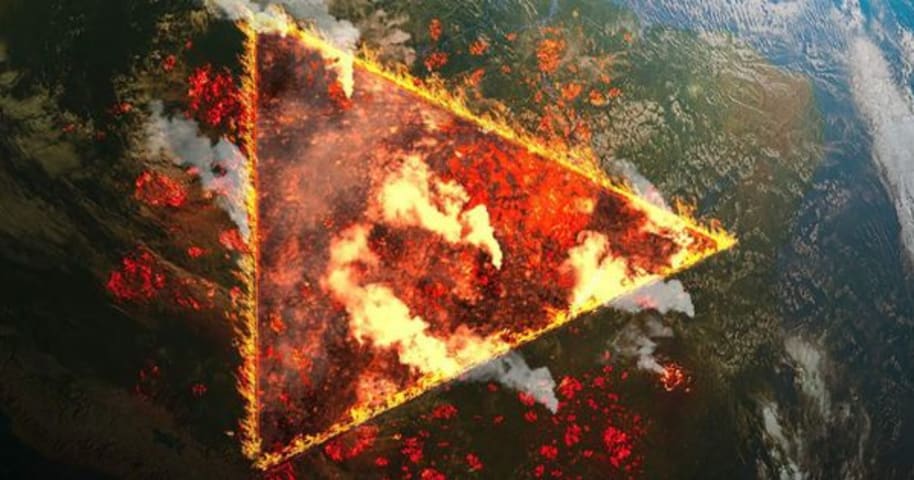

Description: In China, AI tools were reportedly used to fabricate and disseminate false reports of disasters and other events, including a landslide in Yunnan, an earthquake in Sichuan, and a sudden death following a traffic incident. On May 27, 2024, a real 5.0-magnitude earthquake struck Muli County, Sichuan, causing no casualties and limited property damage. A social media post later falsely claimed that the epicenter was in Xide County, exaggerated the event's severity, and added fabricated images of extensive destruction. The people who deployed these false reports have since received administrative penalties from Chinese authorities.

Editor Notes: Incident 835 comprises several discrete AI-related incidents. Like Incident 834, each one illustrates the wider use of AI tools to fabricate events and spread misinformation on social media in China. Pseudonyms such as "Moumou" are used in reporting to protect privacy. The timeline of events associated with this incident ID is reconstructed as follows:

(1) 1/23/2024, a fabricated landslide in Yunnan: Yang Moumou allegedly used AI tools to create and share a fake news article claiming that a landslide in Yunnan had killed eight people. The article was posted on social media to attract views and engagement. Authorities identified it as AI-generated misinformation, and Yang was subsequently given an administrative penalty.

(2) 5/27/2024, a real earthquake in Muli County, Sichuan: a 5.0-magnitude earthquake caused minor property damage but no casualties. This actual event later became the basis for an exaggerated, AI-fabricated narrative.

(3) 6/9/2024, a fabricated earthquake report in Xide County, Sichuan: Luo Moumou reportedly used AI to embellish and circulate a false report about the May 27 earthquake. The report claimed that the epicenter was in Xide County and that the quake had caused severe property damage and casualties. The fabricated images and videos spread quickly on social media, causing confusion and alarm. Luo received an administrative penalty for disseminating false information.

(4) June 2024 (exact date unspecified), a fabricated fatal traffic incident in Chengcheng County, Shaanxi: Tian Mou used AI to create and post a story claiming that a middle-aged woman in Chengcheng County had died of a heart attack after her electric scooter was impounded during a traffic stop. The story was designed to provoke an emotional response and drive engagement, but it misled the public. Tian admitted to fabricating the story with AI and was issued an administrative penalty.

Entities

Alleged: Unknown deepfake technology developer and Unknown AI developers developed an AI system deployed by Yang Moumou, Tian Mou, and Luo Moumou, which harmed the Yunnan general public, residents of Xide County in Sichuan, the Sichuan general public, residents of Muli County in Sichuan, the Chinese general public, Chinese citizens, and residents of Chengcheng County, Shaanxi.

Alleged implicated AI system: Unknown generative AI technology

Incident Stats

Risk Subdomain

A further 23 subdomains refine the risk domains into an accessible and understandable classification of the hazards and harms associated with AI.

3.1. False or misleading information

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Misinformation

Entity

Which, if any, entity is presented as the main cause of the risk

Human

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Intentional

Incident Reports

Reports Timeline


In recent years, information technology, exemplified by big data and artificial intelligence (AI), has advanced rapidly. At the same time, the use of AI synthesis technology to fabricate rumors and even generate p…

Variants

A "variant" is an AI incident similar to a known case—it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.


Similar Incidents
