Entities

CSETv0 Taxonomy Classifications

Problem Nature

Robustness, Assurance

Physical System

Other: Medical system

Level of Autonomy

Low

Nature of End User

Expert

Public Sector Deployment

No

Data Inputs

Surgeon's directions, medical procedures

CSETv1 Taxonomy Classifications

Incident Number

5

AI Tangible Harm Level Notes

No evidence that robots used AI. Robots are guided by surgeons to make precise cuts.

Special Interest Intangible Harm

no

Notes (AI special interest intangible harm)

Only tangible harm was reported; no intangible harm was reported.

Date of Incident Year

2000

Estimated Date

Yes

Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

- AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

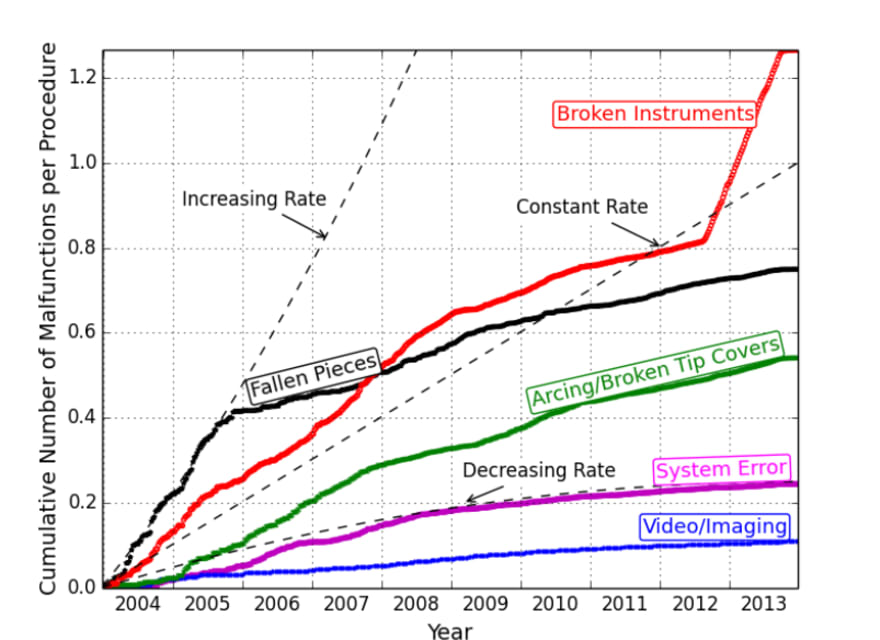

Importance: Understanding the causes and patient impacts of surgical adverse events will help improve systems and operational practices to avoid incidents in the future.

Objective: To determine the frequency, causes, and patient impact of a…

Copyright © 2015: Authors.


Appendix

Underreporting

Underreporting in data collection is a fairly common problem in social sciences, public health, criminology, and microeconomics. It occurs when the counting of s…

Robotic surgeons were involved in the deaths of 144 people between 2000 and 2013, according to records kept by the U.S. Food and Drug Administration. And some forms of robotic surgery are much riskier than others: the death rate for head, n…

All surgery carries risk, and that’s also true when it involves robots. A new study of U.S. Food and Drug Administration data reveals that a variety of malfunctions have been linked to 144 deaths during robotic surgery in the last 14 years.…


The use of robotic systems for some forms of surgery is still a relatively new area, but they have been in use long enough for researchers from MIT, the University of Illinois at Urbana-Champaign, and Rush University Medical Center in…


July 21, 2015, 4:04 PM GMT / Updated July 21, 2015, 7:53 PM GMT By Keith Wagstaff

Robotic surgery is on the ris…

The Food and Drug Administration keeps meticulous records concerning instances of medical devices, including robots, malfunctioning or acting in ways that they aren’t supposed to.

Those records are stored in the Manufacturer and User Facili…

MIT Technology Review: According to a recent study, most of the robotic surgical procedures performed over the past 14 years have gone smoothly. However, a significant number have suffered some sort of adverse event, even if it did not resu…

An independent analysis of reports gathered by the U.S. Food and Drug Administration since 2000 shows that robotic surgery isn’t as safe as some people might assume.

Surgery involving robots, where a surgeon guides the steady and precise mo…


A study into the safety of surgical robots has linked the machines' use to at least 144 deaths and more than 1,…

Surgery on humans using robots has been touted by some as a safer way to get your innards repaired – and now the figures are in for you to judge.

A team of university eggheads have counted up the number of medical cockups in America reporte…

A series of reports submitted to the U.S. Food and Drug Administration since 2000 were analyzed and it was found that robotic surgeries are not safe after all.

In recent years, the use of surgical robots in the medical community has increas…

Variants

Similar Incidents
