Incident Stats

Risk Subdomain

4.3. Fraud, scams, and targeted manipulation

Risk Domain

- Malicious Actors & Misuse

Entity

Human

Timing

Post-deployment

Intent

Intentional

Incident Reports

Reports Timeline

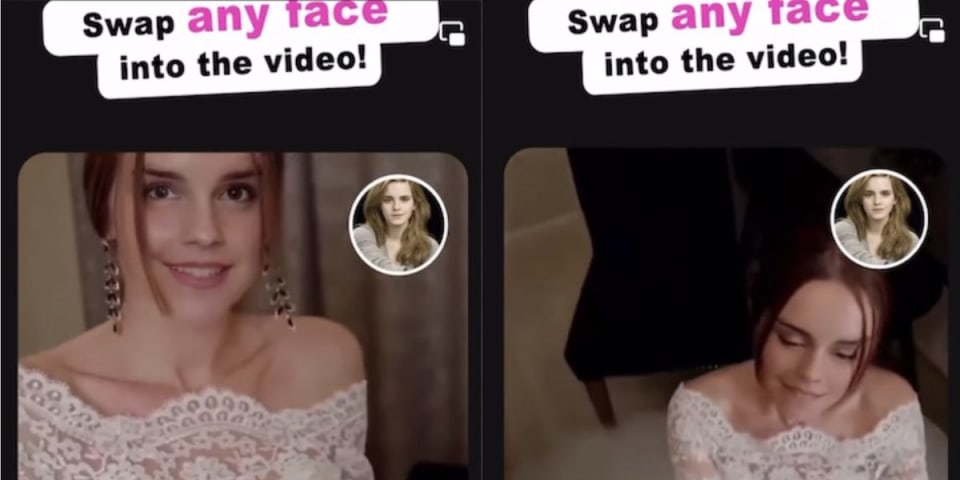

Looking at the video of a deepfaked Emma Watson above, you can see how realistic the technology is.

Seriously, I went to the critically-acclaimed ABBA holographic concert in London recently and the lifelike movements in that clip are stri…

In a Facebook ad, a woman with a face identical to actress Emma Watson’s face smiles coyly and bends down in front of the camera, appearing to initiate a sexual act. But the woman isn’t Watson, the “Harry Potter” star. The ad was part of a …

Earlier this week, an NBC report unearthed a celebrity face-swapping app, Facemega, with the potential to easily create deepfake porn depicting famous or public-facing women. Deepfake porn refers to fake but highly realistic, often AI-gener…

Multiple online stores and Meta have removed a controversial face swap app that promoted a sexually suggestive ad featuring the face of the "Harry Potter" actor Emma Watson imposed onto someone else.

The app for creating deepfakes, called …

In the ad, a woman in a white lace dress makes suggestive faces at the camera, and then kneels. There’s something a bit uncanny about her; a quiver at the side of her temple, a peculiar stillness of her lip. But if you saw the video in the …

Variants

Similar Incidents

Deepfake Obama Introduction of Deepfakes

FaceApp Racial Filters
