Incident Stats

Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

- AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

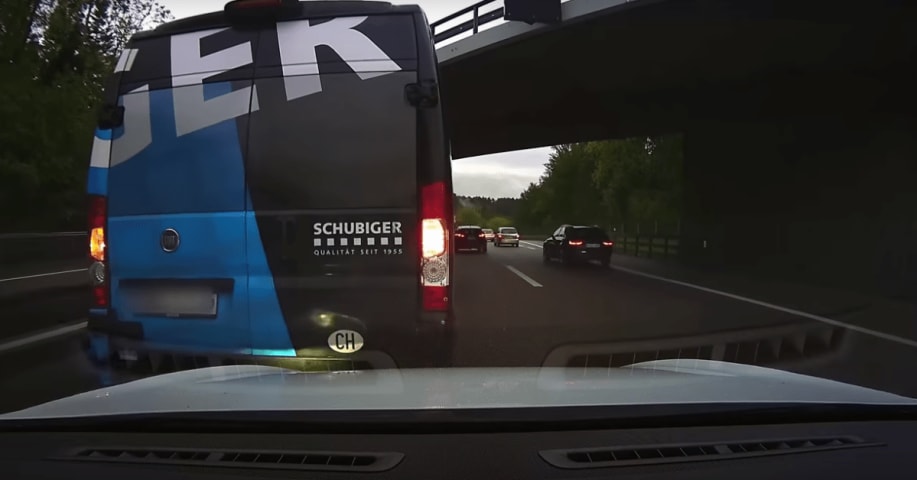

A Tesla Model S driver published a video of his car crashing into a van while on Autopilot. It serves as a great PSA reminding Tesla drivers not to always rely on Autopilot and to be ready to take control at all times. In this particular ca…

Just to make it clear: The Tesla Model S is the absolute best car in the world at the moment. Nothing comes close.

But in this case, there was a problem with the driving aids and also the security systems: none of the safety systems worked corr…

Within a week of Tesla releasing Autopilot to the masses last fall, we started seeing some generally scary videos of people putting a little too much trust in the system. Well, we're continuing to see them, and in the latest video we sadly …