Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

AI system safety, failures, and limitations

Entity

AI

Timing

Pre-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

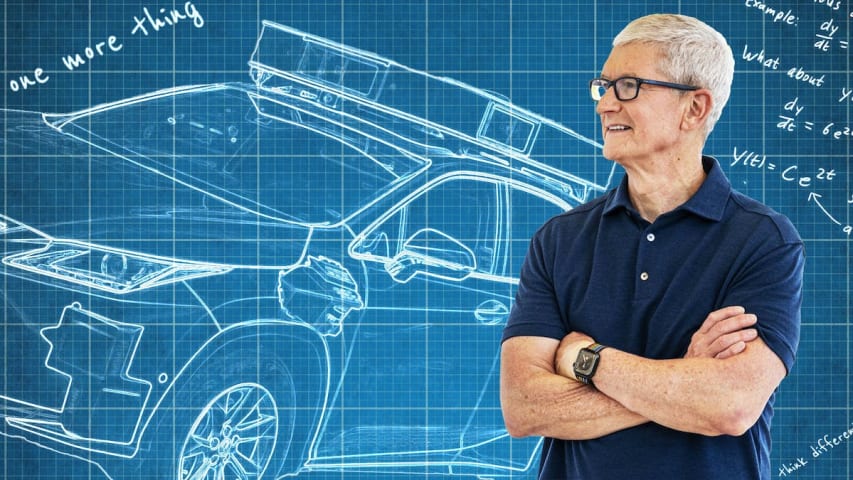

Apple’s self-driving cars had trouble navigating streets, frequently bumped into curbs and veered out of lanes in the middle of intersections during test drives near the company’s Silicon Valley headquarters, according to a report.

Apple ha…

Last August, Apple sent several of its prototype self-driving cars on a roughly 40-mile trek through Montana. Aerial drones filmed the drive, from Bozeman to the ski resort town of Big Sky, so that Apple managers could produce a polished fi…

Several of the automotive and technology industry's biggest names are racing to get the first self-driving car on the road, so it's no surprise Apple, the world's most valuable technology company, has thrown its hat into the ring. Although …

Variants

Similar Incidents
