Risk Subdomain

5.1. Overreliance and unsafe use

Risk Domain

Human-Computer Interaction

Entity

Human

Timing

Post-deployment

Intent

Intentional

Incident Reports

Reports Timeline

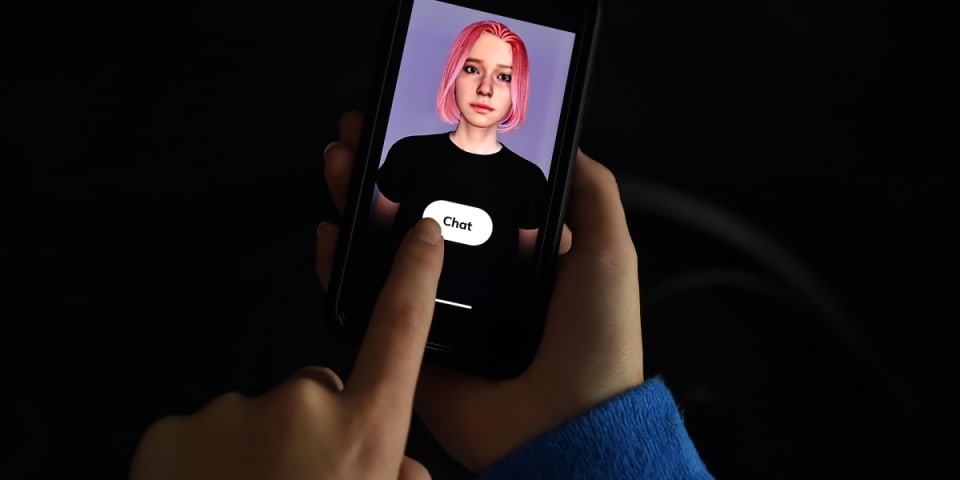

The smartphone app Replika lets users create chatbots, powered by machine learning, that can carry on almost-coherent text conversations. Technically, the chatbots can serve as something approximating a friend or mentor, but the app’s break…

Men are verbally abusing 'AI girlfriends' on apps meant for friendship and then bragging about it online.

Chatbot abuse is becoming increasingly widespread on smartphone apps like Replika, a new investigation by Futurism found.

Some users o…

Replika was designed to be the “AI companion who cares,” but new users have found a twisted way to connect with their new friend.

When you open the Replika site, you see a sample bot, with pink hair and kind eyes. At first, Replika’s bots w…

As Web 3.0 takes shape, different metaverse platforms are appearing on the internet, from Meta's Horizon Worlds to Decentraland, and artificial intelligence is being employed on a larger scale. As is true for all emerging tech, it's facing…

Human interaction with technology has been breaking several boundaries and reaching many more milestones. Today, we have Alexa to turn on the lights in our homes and Siri to set an alarm, just by barking orders at them.

But how exactly …

Hazel Miller’s girlfriend-slash-sexual-partner is a smartphone app. Six months ago, Miller shelled out for the pro version of Replika, a machine-learning chatbot with whom she pantomimes sexual acts and romantic conversation, and to hear he…

AI chatbot company Replika has had enough of its customers thinking that its avatars have come to life.

According to CEO Eugenia Kuyda, the company gets contacted almost every day by users who believe — against almost all existing evidence …